Load balancing is a technique that can distribute work across multiple server nodes. There are many software and hardware load balancing options available including HAProxy, Varnish, Pound, Perlbal, Squid, Nginx and so on. However, many web developers are already familiar with Apache as a web server and it is relatively easy to also configure Apache as a load balancer.

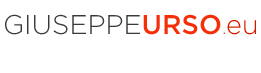

In this post, let’s see how to configure a load balancer in front of a couple of Tomcat servers using the Apache HTTP Server and the mod_jk connector. I also set sticky_session to True so that a request is always routed back to the node that issued its JSESSIONID. Here is the high-level architecture.

Application stack:

– Operating System: CentOS 6.x 64bit

– Apache Web Server: 2.2.15

– Tomcat Server: 6

– Mod JK connector: 1.2.37

– JDK: 1.6

STEP 1. Configure Tomcat Instance 01 – IP 10.10.1.100

# Define the jvmRoute for the Tomcat 01 instance

$ vi Tomcat_home/conf/server.xml

<Server port="8005" shutdown="SHUTDOWN">

...

<Service name="Catalina">

...

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

...

<Engine name="Catalina" defaultHost="localhost" jvmRoute="tomcat01">

...

</Engine>

</Service>

</Server>
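The jvmRoute attribute matters because Tomcat appends a dot and the route name to every session ID it creates on that instance. mod_jk reads that suffix to send the request back to the worker with the same name when sticky_session is enabled. Here is a minimal Java sketch of that extraction. It assumes the route is the text after the first '.'; the session IDs are made up and `routeOf` is a hypothetical helper name.

```java
// Minimal sketch: recover the jvmRoute suffix from a JSESSIONID value.
// Assumption: the route is the text after the first '.', as produced by
// Tomcat when <Engine jvmRoute="..."> is set. Session IDs below are made up.
final class SessionRoute {

    /** Returns the route part of a session ID, or null if there is none. */
    static String routeOf(String sessionId) {
        if (sessionId == null) {
            return null;
        }
        int dot = sessionId.indexOf('.');
        if (dot < 0 || dot == sessionId.length() - 1) {
            return null; // no route suffix: the request is "unassigned"
        }
        return sessionId.substring(dot + 1);
    }

    public static void main(String[] args) {
        System.out.println(routeOf("5A3F0C9E1B7D2E44.tomcat01")); // tomcat01
        System.out.println(routeOf("5A3F0C9E1B7D2E44"));          // null
    }
}
```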

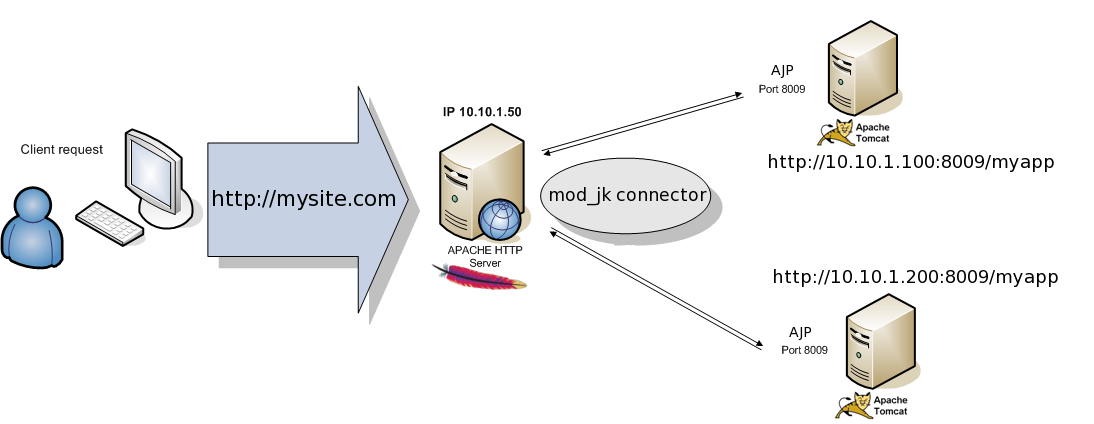

Create a sample JSP page to test the HTTP session on the current Tomcat instance.

$ vi tomcat_home/webapps/test-balancer/index.jsp

<html>

<body>

<%@ page import="java.net.InetAddress" %>

<h1><font color="red">Session serviced by NODE_01</font></h1>

<table align="center" border="1">

<tr>

<td>

Session ID

</td>

<td>

<%= session.getId() %>

</td>

<% session.setAttribute("abc","abc");%>

</tr>

<tr>

<td>

Created on

</td>

<td>

<%= session.getCreationTime() %>

</td>

</tr>

<tr>

<td>

Hostname:

</td>

<td>

<%

InetAddress ia = InetAddress.getLocalHost();

out.println(ia.getHostName());

%>

</td>

</tr>

</table>

</body>

</html>

STEP 2. Configure Tomcat Instance 02 – IP 10.10.1.200

Pay attention to the jvmRoute property: it must have a different value on each Tomcat instance.

# Apply the same settings as above, with a new jvmRoute value and a second JSP test page

$ vi Tomcat_home/conf/server.xml

<Server port="8005" shutdown="SHUTDOWN">

...

<Service name="Catalina">

...

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

...

<Engine name="Catalina" defaultHost="localhost" jvmRoute="tomcat02">

...

</Engine>

</Service>

</Server>

Create a sample JSP page to test the HTTP session on the current Tomcat instance.

$ vi tomcat_home/webapps/test-balancer/index.jsp

<html>

<body>

<%@ page import="java.net.InetAddress" %>

<h1><font color="red">Session serviced by NODE_02</font></h1>

<table align="center" border="1">

....

STEP 3. Set up the Apache Web Server and mod_jk – IP 10.10.1.50

# Install Apache and the libraries required to compile the mod_jk source code

$ yum install httpd httpd-devel apr apr-devel apr-util apr-util-devel gcc gcc-c++ make autoconf openssh*

# Download the mod_jk source

$ wget http://apache.fis.uniroma2.it/tomcat/tomcat-connectors/jk/tomcat-connectors-1.2.37-src.tar.gz

# Compile the source and install mod_jk

$ tar xzvf tomcat-connectors-1.2.37-src.tar.gz

$ cd tomcat-connectors-1.2.37-src/native

$ ./configure --with-apxs=/usr/sbin/apxs

$ make

$ make install

# Configure the mod_jk properties and the VirtualHost definition

$ vi /etc/httpd/conf.d/mod_jk.conf

LoadModule jk_module "/usr/lib64/httpd/modules/mod_jk.so"

JkWorkersFile "/etc/httpd/conf.d/worker.properties"

JkLogFile "/var/log/httpd/mod_jk.log"

JkLogLevel emerg

NameVirtualHost *:80

<VirtualHost *:80>

ServerName mysite.com

JkMount /* bal1

RewriteEngine On

</VirtualHost>

# Configure the balancer workers

$ vi /etc/httpd/conf.d/worker.properties

worker.list=bal1,stat1

worker.bal1.type=lb

worker.bal1.sticky_session=1

worker.bal1.balance_workers=tomcat01,tomcat02

worker.stat1.type=status

worker.tomcat01.type=ajp13

worker.tomcat01.host=tomcat-host01

worker.tomcat01.port=8009

worker.tomcat01.lbfactor=10

worker.tomcat02.type=ajp13

worker.tomcat02.host=tomcat-host02

worker.tomcat02.port=8009

worker.tomcat02.lbfactor=10
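In short, the worker definitions say that bal1 spreads new sessions over tomcat01 and tomcat02, and sticky_session=1 sends requests that already carry a session route back to the worker of the same name. The Java sketch below models only that decision. It is a simplified illustration, not mod_jk's code: failover and lbfactor weighting are left out, and only the worker names come from the properties file.

```java
import java.util.Arrays;
import java.util.List;

// Simplified model of a sticky load balancer (not mod_jk's implementation).
// Requests whose session ID carries a known route go back to that worker;
// all other ("unassigned") requests are spread round-robin.
final class StickyBalancer {
    private final List<String> workers;
    private int next = 0; // index of the next worker for unassigned requests

    StickyBalancer(List<String> workers) {
        this.workers = workers;
    }

    String choose(String sessionId) {
        String route = null;
        if (sessionId != null) {
            int dot = sessionId.indexOf('.');
            if (dot >= 0) {
                route = sessionId.substring(dot + 1);
            }
        }
        if (route != null && workers.contains(route)) {
            return route; // sticky: same worker that created the session
        }
        String worker = workers.get(next); // new session: plain round-robin
        next = (next + 1) % workers.size();
        return worker;
    }

    public static void main(String[] args) {
        StickyBalancer bal1 = new StickyBalancer(Arrays.asList("tomcat01", "tomcat02"));
        System.out.println(bal1.choose(null));              // tomcat01 (new session)
        System.out.println(bal1.choose(null));              // tomcat02 (new session)
        System.out.println(bal1.choose("A1B2C3.tomcat01")); // tomcat01 (sticky)
        System.out.println(bal1.choose(null));              // tomcat01 (round-robin continues)
    }
}
```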

Set up the DNS entries in the /etc/hosts file of each machine. The first entry (mysite.com) must also be present on the client machine where you plan to open the browser for the final test.

$ vi /etc/hosts

10.10.1.50 mysite.com

10.10.1.100 tomcat-host01

10.10.1.200 tomcat-host02

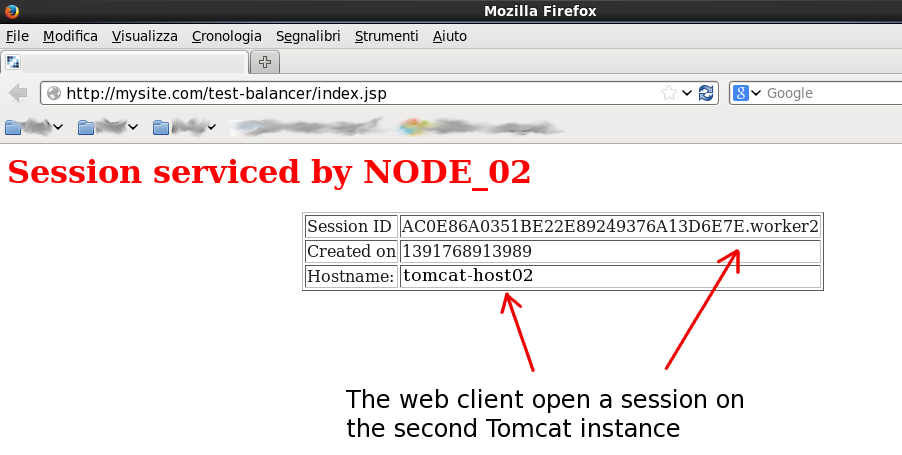

Finally, test the load balancer using the two sample JSP pages. Open your browser and go to http://mysite.com/test-balancer/index.jsp: the response comes from one of the two Tomcat instances. To be sure a new HTTP session is opened, clear the browser cache and cookies (the session ID is kept in the JSESSIONID cookie), then reload the page.

Repeat this a few times until a new session is opened on the second Tomcat instance. Here is the page showing the new session on the second Tomcat instance.
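The browser test can also be scripted. Below is a sketch in Java (7 or later), assuming the balancer answers at the URL used in this article and the test pages contain the "Session serviced by NODE_xx" heading. `BalancerCheck` and `nodeOf` are hypothetical names. The client sends no cookie, so every call should open a new session.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URI;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch: request the test page several times and print which node answered.
// No JSESSIONID cookie is sent (HttpURLConnection ignores cookies unless a
// CookieHandler is installed), so every request starts a new session.
final class BalancerCheck {
    private static final Pattern NODE = Pattern.compile("Session serviced by (NODE_\\d+)");

    /** Extracts "NODE_01" or "NODE_02" from the page body, or null if absent. */
    static String nodeOf(String html) {
        Matcher m = NODE.matcher(html);
        return m.find() ? m.group(1) : null;
    }

    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), "UTF-8"))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line).append('\n');
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) throws IOException {
        String url = "http://mysite.com/test-balancer/index.jsp"; // URL from the article
        for (int i = 1; i <= 4; i++) {
            System.out.println("request " + i + " -> " + nodeOf(fetch(url)));
        }
    }
}
```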


(Originally in Polish) A well-explained configuration, without unnecessary digressions. It will be useful when I set up load balancing for my applications.

Hi Kamil,

your comment seems to be written in Polish. Could you please rewrite it in English?

Giuseppe

A well-explained configuration, without unnecessary details. It will be useful when I set up load balancing for my application.

Oh thanks for your comment!

I wrote an essential balancing tutorial without verbose descriptions.

Glad to hear it’s useful!

Hi Urso,

Thank you for your guide.

In the guide above, you use two Tomcat servers on two different physical servers, each with port 8009 (the default AJP port).

Can you design an Apache HTTP + Tomcat architecture that uses two Tomcat instances on a single physical server?

Hi letrung,

running multiple Tomcat instances on the same physical server is not a good practice for production environments. You must consider the lack of high availability and all the risks related to a single point of failure (the single server). In addition, Tomcat hosts Java-based applications, and Java has a significant resource consumption (RAM and CPU utilization). This forces you to plan a Java tuning activity (hard if you do not have any experience with Java applications).

However, if you want to install two Tomcat instances on the same physical server, you must specify alternative ports for the second Tomcat instance to avoid port conflicts. The configuration file is conf/server.xml; you have to change these ports:

– server shutdown port [default=8005]

– server HTTP connector port [default=8080]

– server ajp port [default=8009]

– server SSL port [default=8443] (if enabled)

In this case, pay attention to the Apache mod_jk configuration. You must update the worker.properties and the /etc/hosts, for example:

worker.node01.host=tomcat-host01

worker.node01.port=8009

worker.node02.host=tomcat-host02

worker.node02.port=20009

10.10.1.100 tomcat-host01 tomcat-host02
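Before starting the second instance, you can compare the ports configured in each conf/server.xml to check for conflicts. The following small Java sketch assumes the two port sets are typed in by hand. The first set is the Tomcat defaults listed above. In the second set only 20009 comes from the example; 20005, 20080 and 20443 are hypothetical values. `PortCheck` is an illustrative name.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Sketch: report ports used by both Tomcat instances on the same host.
// Port values are examples; read the real ones from each conf/server.xml.
final class PortCheck {

    /** Returns the port numbers that appear in both instances' configs. */
    static Set<Integer> conflicts(Map<String, Integer> a, Map<String, Integer> b) {
        Set<Integer> shared = new TreeSet<Integer>(a.values());
        shared.retainAll(b.values());
        return shared;
    }

    /** Builds the port set of one instance (shutdown, HTTP, AJP, SSL). */
    static Map<String, Integer> ports(int shutdown, int http, int ajp, int ssl) {
        Map<String, Integer> m = new LinkedHashMap<String, Integer>();
        m.put("shutdown", shutdown);
        m.put("http", http);
        m.put("ajp", ajp);
        m.put("ssl", ssl);
        return m;
    }

    public static void main(String[] args) {
        Map<String, Integer> first = ports(8005, 8080, 8009, 8443);      // Tomcat defaults
        Map<String, Integer> second = ports(20005, 20080, 20009, 20443); // hypothetical
        System.out.println(conflicts(first, second));                        // []
        System.out.println(conflicts(first, ports(8005, 20080, 20009, 20443))); // [8005]
    }
}
```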

Giuseppe

I would be very interested in removing the last single-point-of-failure in this architecture – the client-facing Apache server. With appropriate DNS configuration, we can have multiple servers responding to the same external hostname (“mysite.com”); which results in multiple load-balancers. Can these successfully address a shared “pool” of worker nodes, rather than separate pools or clusters?

The main issue seems to be how the load-balancers then know the state of the workers. With a single load-balancer, the load-balancer itself knows how loaded the worker is. With multiple load-balancers, some communication, collaboration, or feedback would be required.

Would a “status” node provide this? Examples I have seen do not illuminate this scenario. A status task must run alongside each load-balancer, and somehow determine the status of all worker nodes independently of what its load-balancer is assigning. This should be do-able, but a definitive statement is proving hard to find…

Thanks Simon for your comment,

I’m not sure I understood your issue correctly. Could your problem be related to keeping the request state (sessions) from/to the Tomcat hosts? With Apache+mod_jk you resolve this kind of issue. If the sticky_session property is set to True, sessions are sticky and requests are preserved from Apache to the worker nodes (and vice versa). The sticky_session property specifies whether requests with session IDs should be routed back to the same Tomcat worker.

When a request first comes in from an HTTP client to the load balancer, it is a request for a new session. A request for a new session is called an unassigned request. The load balancer routes this request to an application server instance in the tomcat group according to a round-robin algorithm.

Once a session is created on an application server instance, the load balancer routes all subsequent requests for this session only to that particular instance.

I think that if you have configured multiple Apache web servers to respond to the same DNS name (“example.com”), you have already done the hardest part.

With Apache+mod_jk and sticky_session=true your load balancer, after routing a request to a given worker, will pass all subsequent requests with matching sessionID values to the same worker. In the event that this worker fails, the load balancer will begin routing this request to the next most available server that has access to the failed server’s session information.

If you have multiple Apache web servers, the result is the same. Here is what I imagine:

LAYER 01 (client side)

– mysite.com unique DNS —> multiple hosts: 10.10.10.11 (apache-01), 10.10.10.12 (apache-02), ….N.N.N.N(apache-N)

LAYER 02 (apache layer)

10.10.10.11 (apache-01) –> (mod_jk + sticky_session=true) –> worker1=tomcat1, worker2=tomcat2,…M

10.10.10.12 (apache-02) –> (mod_jk + sticky_session=true) –> worker1=tomcat1, worker2=tomcat2,…M

…..

N.N.N.N (apache-N) –> (mod_jk + sticky_session=true) –> worker1=tomcat1, worker2=tomcat2,…M

LAYER 03 (tomcat layer)

tomcat1 —> sessions are sticky

tomcat2 —> sessions are sticky

….

tomcat-M
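Stickiness survives with several Apache front-ends because the route travels inside the session ID, so any front-end that receives the request can read it. Each front-end's balancing state for new sessions is not shared, which is the point Simon raises. The Java sketch below is a simplified model, not mod_jk. Two independent objects stand in for apache-01 and apache-02, and their starting counters are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Simplified model (not mod_jk): two Apache front-ends, each with its OWN
// round-robin counter, balancing the same pool of Tomcat workers.
final class SharedPool {
    static final class FrontEnd {
        private final List<String> workers;
        private int next;

        FrontEnd(List<String> workers, int start) {
            this.workers = workers;
            this.next = start;
        }

        String choose(String sessionId) {
            int dot = sessionId == null ? -1 : sessionId.indexOf('.');
            if (dot >= 0 && workers.contains(sessionId.substring(dot + 1))) {
                return sessionId.substring(dot + 1); // sticky: route read from the ID
            }
            String w = workers.get(next); // new session: local round-robin only
            next = (next + 1) % workers.size();
            return w;
        }
    }

    public static void main(String[] args) {
        List<String> pool = Arrays.asList("tomcat1", "tomcat2");
        FrontEnd apache01 = new FrontEnd(pool, 0);
        FrontEnd apache02 = new FrontEnd(pool, 1);
        String session = "9F8E7D.tomcat2"; // made-up session ID
        // DNS may send requests of one session to different front-ends:
        System.out.println(apache01.choose(session)); // tomcat2
        System.out.println(apache02.choose(session)); // tomcat2
    }
}
```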

A last note: using the worker’s load-balancing factor (worker.node[N].lbfactor=X), you perform weighted round-robin load balancing, where a higher lbfactor means a stronger machine (one that is going to handle more requests).
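The lbfactor weighting can be illustrated with a generic "smooth" weighted round-robin. This is a swapped-in technique, not mod_jk's exact accounting. Each worker is picked in proportion to its lbfactor, and picks of the same worker are spread out. The factors 20 and 10 are hypothetical: a stronger tomcat01 and a weaker tomcat02.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Generic smooth weighted round-robin (NOT mod_jk's exact algorithm).
// Each worker is picked in proportion to its lbfactor.
final class WeightedRoundRobin {
    private final Map<String, Integer> lbfactor;
    private final Map<String, Integer> current = new LinkedHashMap<String, Integer>();
    private final int total;

    WeightedRoundRobin(Map<String, Integer> lbfactor) {
        this.lbfactor = lbfactor;
        int sum = 0;
        for (Map.Entry<String, Integer> e : lbfactor.entrySet()) {
            current.put(e.getKey(), 0);
            sum += e.getValue();
        }
        this.total = sum;
    }

    String next() {
        String best = null;
        for (Map.Entry<String, Integer> e : lbfactor.entrySet()) {
            int value = current.get(e.getKey()) + e.getValue(); // grow by weight
            current.put(e.getKey(), value);
            if (best == null || value > current.get(best)) {
                best = e.getKey();
            }
        }
        current.put(best, current.get(best) - total); // penalize the winner
        return best;
    }

    public static void main(String[] args) {
        Map<String, Integer> factors = new LinkedHashMap<String, Integer>();
        factors.put("tomcat01", 20); // hypothetical: stronger machine
        factors.put("tomcat02", 10); // hypothetical: weaker machine
        WeightedRoundRobin wrr = new WeightedRoundRobin(factors);
        StringBuilder picks = new StringBuilder();
        for (int i = 0; i < 6; i++) {
            picks.append(wrr.next()).append(' ');
        }
        System.out.println(picks.toString().trim());
    }
}
```

With equal factors (10 and 10, as in the worker.properties above), the same code simply alternates between the two workers.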

I hope this will help you.

Giuseppe

Hi,

I have done Apache/Tomcat load balancing before, but on a big production server. I have two physical servers and each one holds 12 Tomcat instances; one of them also hosts the Apache server. As it is a registration system, during the registration period we get a very large number of concurrent requests, sometimes above 50K. In my experience, with that many requests my server fails to behave normally: many requests fail, many wait forever, and those that do get a response take a long time. My server configuration is moderate: almost 64 GB RAM and plenty of disk space.

Can somebody guide me on the best approach to load balancing in such a situation?

Hi jim,

tuning and system optimization are based on years of design and performance experience. Symptoms of a problem can have many possible causes in a distributed architecture like yours.

Have you identified the part of the system that is critical for improving performance? What is the bottleneck?

You should consider a number of components:

– Apache layer

– Tomcat layer (response time, Java tuning)

– Database

– CPU

– Disk I/O

– Network

If you think Apache is the bottleneck, you should benchmark the web server to find out whether it can handle a sufficiently high workload. The most effective way to tune your system is to have an established performance baseline that you can use for comparison when a performance issue arises. For example, you can start by identifying the peak periods, installing a monitoring tool that gathers performance data for those high-load times.

For a Web server benchmark you should consider:

– number of requests per second;

– latency response time in milliseconds for each new connection or request;

– throughput in bytes per second (depending on file size, cached or not cached content, available network bandwidth, etc.).

Performance testing of a web server can be done with tools like httperf, ApacheBench and JMeter.
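The three metrics above are simple ratios over one run. The following Java sketch assumes you already have per-request latencies, the total bytes received and the elapsed time from such a tool. The sample numbers in `main` are invented for illustration, and `BenchmarkStats` is a hypothetical name.

```java
import java.util.Locale;

// Sketch: derive requests/s, mean latency (ms) and throughput (bytes/s)
// from one benchmark run. Input values in main() are illustrative only.
final class BenchmarkStats {
    static double requestsPerSecond(int requests, double elapsedSeconds) {
        return requests / elapsedSeconds;
    }

    static double meanLatencyMillis(double[] latenciesMillis) {
        double sum = 0;
        for (double l : latenciesMillis) {
            sum += l;
        }
        return sum / latenciesMillis.length;
    }

    static double throughputBytesPerSecond(long totalBytes, double elapsedSeconds) {
        return totalBytes / elapsedSeconds;
    }

    public static void main(String[] args) {
        double[] latencies = {12.0, 15.0, 9.0, 20.0}; // ms, one entry per request
        double elapsed = 0.5;                          // seconds for the whole run
        long bytes = 4 * 2048;                         // 2 KB page per response
        System.out.printf(Locale.ROOT, "%.1f req/s, %.1f ms, %.1f B/s%n",
                requestsPerSecond(latencies.length, elapsed),
                meanLatencyMillis(latencies),
                throughputBytesPerSecond(bytes, elapsed));
    }
}
```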

Giuseppe

Can the jvmRoute string in server.xml be the same string as the local host’s hostname?

Hello Matiew,

yes, of course. In the article I’ve only used example names for the jvmRoute property. You can configure any string you want; the important thing is that it matches a worker name in the balance_workers property:

worker.bal1.balance_workers=tomcat01,tomcat02
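A mismatch between the jvmRoute values and the worker names can break stickiness, so a quick consistency check can help. The Java sketch below assumes the jvmRoute values are copied by hand from each server.xml and the balancer is named bal1, as in worker.properties. `RouteCheck` and `missingWorkers` are hypothetical names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Sketch: list the jvmRoute values that do not appear in the balancer's
// balance_workers property. jvmRoute values are passed in by hand here.
final class RouteCheck {

    static List<String> missingWorkers(Properties props, String balancer, List<String> jvmRoutes) {
        String list = props.getProperty("worker." + balancer + ".balance_workers", "");
        List<String> workers = new ArrayList<String>();
        for (String w : list.split(",")) {
            if (!w.trim().isEmpty()) {
                workers.add(w.trim());
            }
        }
        List<String> missing = new ArrayList<String>();
        for (String route : jvmRoutes) {
            if (!workers.contains(route)) {
                missing.add(route);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(
                "worker.list=bal1,stat1\n"
              + "worker.bal1.type=lb\n"
              + "worker.bal1.balance_workers=tomcat01,tomcat02\n"));
        System.out.println(missingWorkers(props, "bal1", Arrays.asList("tomcat01", "tomcat02"))); // []
        System.out.println(missingWorkers(props, "bal1", Arrays.asList("tomcat01", "node02")));   // [node02]
    }
}
```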

Thanks for your comment

Giuseppe

There’s probably a mistake in your jk_workers

worker.bal1.balance_workers=tomcat01,tomcat02

But the workers are called node01 and node02, not tomcat01 and tomcat02

Thank you Gerard for the comment.

What you say is right. Worker names are “tomcat01” and “tomcat02”. I’ve updated the properties file.

Thanks again

Giuseppe

Hello,

I’m new to Tomcat and I have a few questions:

– Is this a workable setup: two servers (one with Apache HTTP Server and Tomcat, the other with Tomcat only), or should Apache be on its own server?

– Is there a rule of thumb regarding server specs? CPU, RAM, etc.

Hello

You don’t necessarily need multiple servers. You can also install Apache and the two Tomcat services on the same machine. But remember that if that server fails, the entire system stops working (single point of failure). This is a common setup for development environments, as opposed to production environments where high availability or reliability is a goal.

As for server specs, it depends on the volume of traffic you are expecting. You can test and tune your system using benchmark tools like httperf, JMeter and so on. Start with an initial hardware configuration (let’s say 2 GB RAM and a 2 GHz CPU), check the performance of your system, then upgrade it according to your needs. This should help you: http://www.giuseppeurso.eu/en/http-load-testing-using-apachebenchmark-and-httperf/